Install TensorFlow 2

TensorFlow is tested and supported on the following 64-bit systems:

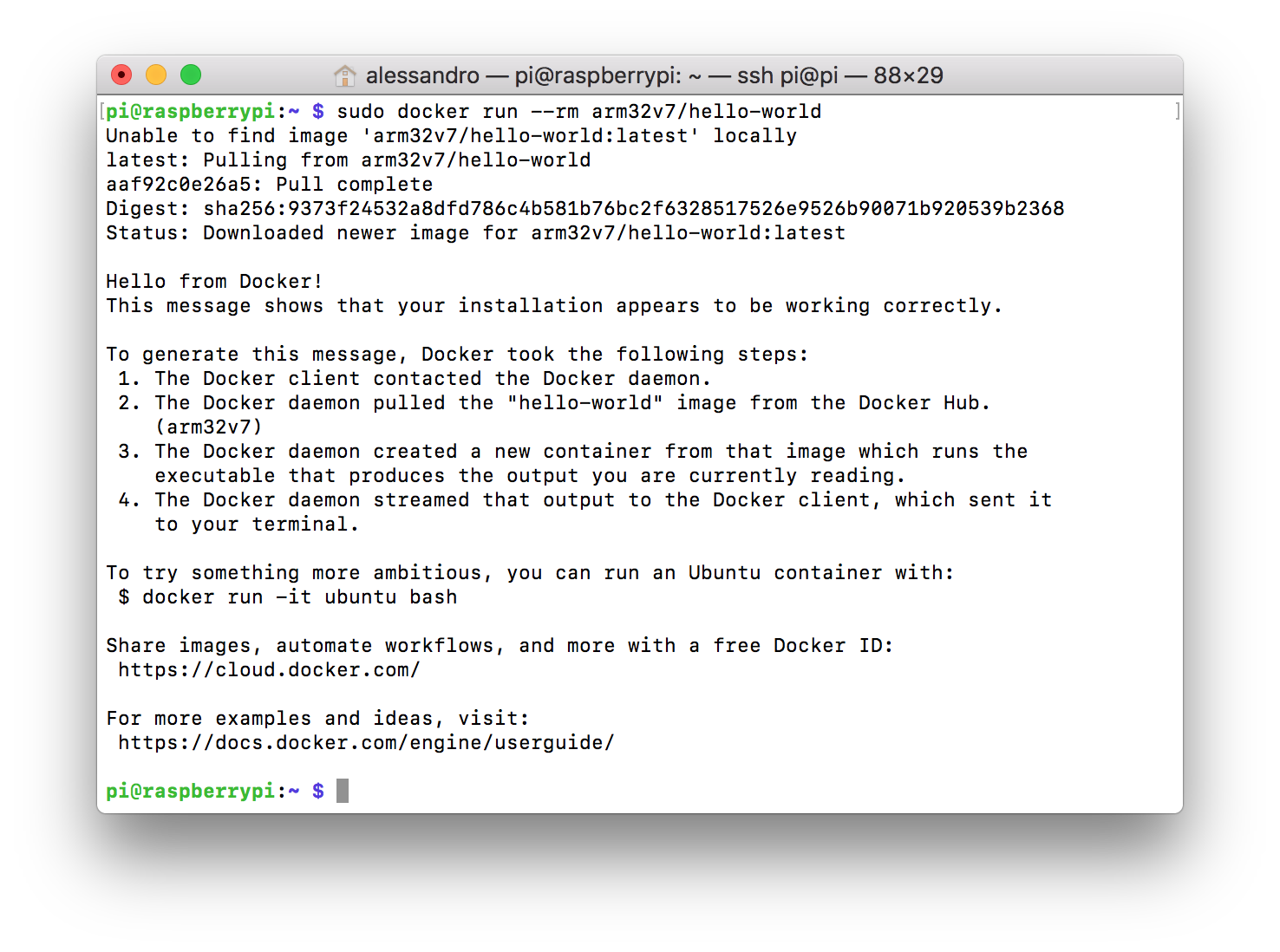

The first step is to install Docker Desktop for Windows or Mac. After downloading the package, install it either by dragging the whale icon into your Applications folder on Mac or by clicking through the installer on Windows. Docker Desktop for Windows requires Microsoft Hyper-V to run. On Linux, install Docker for your distribution: choose your distribution to get detailed installation instructions, and if yours is not shown, read the Docker install guide. After you have installed Docker on your machine, you can pull the MXNet GPU image with:

$ docker pull mxnet/python:gpu # Use sudo if you skip Step 2

You can list Docker images to check whether the mxnet/python image pull was successful:

$ docker images # Use sudo if you skip Step 2
REPOSITORY     TAG   IMAGE ID       CREATED       SIZE
mxnet/python   gpu   493b2683c269   3 weeks ago   4.77 GB

Google Colab: An easy way to learn and use TensorFlow

No install necessary—run the TensorFlow tutorials directly in the browser with Colaboratory, a Google research project created to help disseminate machine learning education and research. It's a Jupyter notebook environment that requires no setup to use and runs entirely in the cloud. Read the blog post.

Build and install Apache MXNet (incubating) from source

To build and install MXNet from the official, signed Apache Software Foundation source code, please follow our Building From Source guide.

The signed source releases are available here.

Platform and use-case specific instructions for using MXNet

Please indicate your preferred configuration below to see specific instructions.

WARNING: the following PyPI package names are provided for your convenience, but they point to packages that are neither provided nor endorsed by the Apache Software Foundation. As such, they might contain software components with more restrictive licenses than the Apache License, and you’ll need to decide whether they are appropriate for your usage. The packages linked here contain GPL GCC Runtime Library components. Like all Apache Releases, the official Apache MXNet (incubating) releases consist of source code only and are found at the Download page.

Run the following command:

You can then validate your MXNet installation.
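A minimal validation sketch, assuming the installed package provides the `mxnet` module with the MXNet 1.x NDArray API (`mx.nd`). It builds a small array, does some arithmetic, and checks the result; the function name `validate_mxnet` is hypothetical.

```python
import importlib


def validate_mxnet():
    """Return True if MXNet imports and computes 2 * ones + 1 correctly."""
    try:
        mx = importlib.import_module("mxnet")
    except (ImportError, OSError):
        # MXNet is missing, or its shared library (libmxnet.so) failed to load.
        return False
    a = mx.nd.ones((2, 3))  # 2x3 NDArray filled with 1.0
    b = a * 2 + 1           # element-wise arithmetic; every entry becomes 3.0
    return bool((b.asnumpy() == 3).all())
```

Calling `validate_mxnet()` from the same Python environment you installed into should return `True`; `False` means the import failed.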

NOTES:

mxnet-cu101 means the package is built with CUDA/cuDNN and the CUDA version is 10.1.

All MKL pip packages are experimental prior to version 1.3.0.
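The naming convention in the first note can be expressed as a small helper. This is a sketch that generalizes from the single documented example (`mxnet-cu101` for CUDA 10.1) and assumes other CUDA versions follow the same `mxnet-cu<major><minor>` pattern, with `mxnet` as the CPU-only package; confirm that a generated name actually exists on PyPI before installing it. The function name is hypothetical.

```python
def mxnet_pip_name(cuda_version=None):
    """Return a pip package name following the mxnet-cuXY convention.

    cuda_version is a "major.minor" string such as "10.1"; None selects the
    CPU-only package.
    """
    if cuda_version is None:
        return "mxnet"
    major, minor = cuda_version.split(".")[:2]
    # "10.1" -> "cu101", matching the mxnet-cu101 example above
    return "mxnet-cu" + major + minor
```

For example, `mxnet_pip_name("10.1")` gives `"mxnet-cu101"`, and a patch-level string such as `"10.1.243"` maps to the same name because only the first two components are used.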

WARNING: the following links and names of binary distributions are provided for your convenience, but they point to packages that are neither provided nor endorsed by the Apache Software Foundation. As such, they might contain software components with more restrictive licenses than the Apache License, and you’ll need to decide whether they are appropriate for your usage. Like all Apache Releases, the official Apache MXNet (incubating) releases consist of source code only and are found at the Download page.

Docker images with MXNet are available at DockerHub. After you have installed Docker on your machine, you can use them via:

You can list Docker images to check whether the mxnet/python image pull was successful.
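If you want to script this check, the sketch below scans captured `docker images` output for a repository and tag, assuming the default table layout (a header row, then repository and tag as the first two columns). The sample text is the listing shown for the mxnet/python GPU image; the function name `has_image` is hypothetical.

```python
def has_image(docker_images_output, repository, tag):
    """Return True if `docker images` table output lists repository:tag."""
    lines = docker_images_output.strip().splitlines()
    for line in lines[1:]:  # skip the REPOSITORY/TAG/... header row
        fields = line.split()
        if len(fields) >= 2 and fields[0] == repository and fields[1] == tag:
            return True
    return False


SAMPLE = """REPOSITORY     TAG   IMAGE ID       CREATED       SIZE
mxnet/python   gpu   493b2683c269   3 weeks ago   4.77 GB"""
```

Parsing the human-readable table is fragile; `docker images --format "{{.Repository}}:{{.Tag}}"` produces one `repository:tag` per line and is easier to match exactly.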

You can then validate the installation.

Please follow the build from source instructions linked above.

WARNING: the following PyPI package names are provided for your convenience, but they point to packages that are neither provided nor endorsed by the Apache Software Foundation. As such, they might contain software components with more restrictive licenses than the Apache License, and you’ll need to decide whether they are appropriate for your usage. The packages linked here contain proprietary parts of the NVIDIA CUDA SDK and GPL GCC Runtime Library components. Like all Apache Releases, the official Apache MXNet (incubating) releases consist of source code only and are found at the Download page.

Run the following command:

You can then validate your MXNet installation.

NOTES:

mxnet-cu101 means the package is built with CUDA/cuDNN and the CUDA version is 10.1.

All MKL pip packages are experimental prior to version 1.3.0.

CUDA should be installed first.

Important: Make sure your installed CUDA version matches the CUDA version in the pip package.

Check your CUDA version with the following command:

You can either upgrade your CUDA install or install the MXNet package that supports your CUDA version.
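The version comparison can be sketched in Python. This assumes the CUDA toolkit's `nvcc` is on `PATH` and that its `--version` output contains a `release X.Y` fragment, as CUDA 10.x toolkits print; note that `nvcc` reports the toolkit version, which can differ from the driver version shown by `nvidia-smi`. The helper names are hypothetical, and the package check assumes the `mxnet-cu<major><minor>` naming from the notes above.

```python
import re
import shutil
import subprocess


def cuda_version_from_nvcc(output):
    """Extract "major.minor" from `nvcc --version` text, or None if absent."""
    match = re.search(r"release (\d+)\.(\d+)", output)
    if match is None:
        return None
    return match.group(1) + "." + match.group(2)


def installed_cuda_version():
    """Run nvcc if it is on PATH and return its CUDA version, else None."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run([nvcc, "--version"], capture_output=True, text=True)
    return cuda_version_from_nvcc(result.stdout)


def package_matches_cuda(package, cuda_version):
    """True if a name like "mxnet-cu101" targets the given "10.1" version."""
    major, minor = cuda_version.split(".")[:2]
    return package == "mxnet-cu" + major + minor


SAMPLE_NVCC = "Cuda compilation tools, release 10.1, V10.1.243"
```

With the sample line, `cuda_version_from_nvcc(SAMPLE_NVCC)` yields `"10.1"`, so `mxnet-cu101` matches and `mxnet-cu92` does not.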

WARNING: the following links and names of binary distributions are provided for your convenience, but they point to packages that are neither provided nor endorsed by the Apache Software Foundation. As such, they might contain software components with more restrictive licenses than the Apache License, and you’ll need to decide whether they are appropriate for your usage. Like all Apache Releases, the official Apache MXNet (incubating) releases consist of source code only and are found at the Download page.

Docker images with MXNet are available at DockerHub.

Please follow the NVIDIA Docker installation instructions to enable the use of GPUs from Docker containers.

After you have installed Docker on your machine, you can use them via:

You can list Docker images to check whether the mxnet/python image pull was successful.

You can then validate the installation.

Please follow the build from source instructions linked above.

You will need R v3.4.4+, and you must build MXNet from source. Please follow the instructions linked above.

You can use the Maven packages defined in the following dependency to include MXNet in your Java project. The Java API is provided as a subset of the Scala API and is intended for inference only. Please refer to the MXNet-Java setup guide for a detailed set of instructions to help you with the setup process.

You can use the Maven packages defined in the following dependency to include MXNet in your Clojure project. To maximize leverage, the Clojure package has been built on the existing Scala package. Please refer to the MXNet-Scala setup guide for a detailed set of instructions to help you with the setup process that is required to use the Clojure dependency.

Previously available binaries distributed via Maven have been removed as they redistributed Category-X binaries in violation of Apache Software Foundation (ASF) policies.

At this point in time, no third-party binary Java packages are available. Please follow the build from source instructions linked above.

Please follow the build from source instructions linked above.

Please follow the build from source instructions linked above.

To use the C++ package, build from source with the USE_CPP_PACKAGE=1 option. Please refer to the build from source instructions linked above.

MXNet is available on several cloud providers with GPU support. You can also find GPU/CPU-hybrid support for use cases like scalable inference, or even fractional GPU support with AWS Elastic Inference.

WARNING: the following cloud provider packages are provided for your convenience, but they point to packages that are neither provided nor endorsed by the Apache Software Foundation. As such, they might contain software components with more restrictive licenses than the Apache License, and you’ll need to decide whether they are appropriate for your usage. Like all Apache Releases, the official Apache MXNet (incubating) releases consist of source code only and are found at the Download page.

- Alibaba

- Amazon Web Services

  - Amazon SageMaker - Managed training and deployment of MXNet models

  - AWS Deep Learning AMI - Preinstalled Conda environments for Python 2 or 3 with MXNet, CUDA, cuDNN, MKL-DNN, and AWS Elastic Inference

  - Dynamic Training on AWS - experimental manual EC2 setup or semi-automated CloudFormation setup

- Google Cloud Platform

- Microsoft Azure

- Oracle Cloud

All NVIDIA VMs use the NVIDIA MXNet Docker container. Follow the container usage instructions found in NVIDIA’s container repository.

MXNet should work on any cloud provider’s CPU-only instances. Follow the Python pip install instructions or the Docker instructions, or try the following preinstalled option.

WARNING: the following cloud provider packages are provided for your convenience, but they point to packages that are neither provided nor endorsed by the Apache Software Foundation. As such, they might contain software components with more restrictive licenses than the Apache License, and you’ll need to decide whether they are appropriate for your usage. Like all Apache Releases, the official Apache MXNet (incubating) releases consist of source code only and are found at the Download page.

- Amazon Web Services

  - AWS Deep Learning AMI - Preinstalled Conda environments for Python 2 or 3 with MXNet and MKL-DNN.

MXNet supports Raspbian, a Debian-based operating system for ARM, so you can run MXNet on Raspberry Pi 3B devices.

These instructions will walk through how to build MXNet for the Raspberry Pi and install the Python bindings for the library.

You can do a dockerized cross-compilation build on your local machine or a native build on-device.

The complete MXNet library and its requirements can take almost 200 MB of RAM, and loading large models with the library can take over 1 GB of RAM. Because of this, we recommend running MXNet on the Raspberry Pi 3 or an equivalent device that has more than 1 GB of RAM and a Secure Digital (SD) card with at least 4 GB of free space.
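A rough pre-flight check against those figures, sketched under the assumption that the device runs Linux (for `/proc/meminfo`) and that MXNet will live on the root filesystem. The function names are hypothetical, and the thresholds are taken directly from the paragraph above.

```python
import shutil

GB = 1024 ** 3


def total_ram_bytes(meminfo_path="/proc/meminfo"):
    """Read MemTotal (reported in kB) from a Linux /proc/meminfo file."""
    with open(meminfo_path) as meminfo:
        for line in meminfo:
            if line.startswith("MemTotal:"):
                return int(line.split()[1]) * 1024
    raise ValueError("MemTotal not found in " + meminfo_path)


def free_disk_bytes(path="/"):
    """Free space on the filesystem that holds `path`."""
    return shutil.disk_usage(path).free


def resource_warnings(ram_bytes, free_disk):
    """Compare against the guide's figures: >1 GB RAM, >=4 GB free storage."""
    warnings = []
    if ram_bytes <= GB:
        warnings.append("1 GB of RAM or less: large models may not fit; consider enabling swap")
    if free_disk < 4 * GB:
        warnings.append("less than 4 GB of free storage")
    return warnings
```

On the device, `resource_warnings(total_ram_bytes(), free_disk_bytes())` lists any shortfalls. `MemTotal` reports slightly less than a board's nominal RAM, so a 1 GB board such as the Raspberry Pi 3 will trigger the RAM warning; treat it as a reminder to enable swap rather than a hard failure.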

Quick installation

You can use this pre-built Python wheel on a Raspberry Pi 3B with Stretch. You will likely need to install several dependencies to get MXNet to work. Refer to the following Build section for details.

Docker installation

Step 1 Install Docker on your machine by following the Docker installation instructions.

Note - You can install the Community Edition (CE).

Step 2 [Optional] Post-installation steps to manage Docker as a non-root user.

Follow the four steps in this Docker documentation to allow managing Docker containers without sudo.

Build

This cross compilation build is experimental.

Please use a native build with gcc 4 as explained below; higher compiler versions currently cause test failures on ARM.

Docker Install Raspbian

The following command will build a container with dependencies and tools, and then compile MXNet for ARMv7. You will want to run this on a fast cloud instance or locally on a fast PC to save time. The resulting artifact will be located in build/mxnet-x.x.x-py2.py3-none-any.whl. Copy this file to your Raspberry Pi. The previously mentioned pre-built wheel was created using this method.
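Before copying the artifact to the Pi, you can locate it with a glob over the `build` directory. This sketch assumes the wheel name keeps the `mxnet-<version>-py2.py3-none-any.whl` shape given above and picks the newest file if several builds left wheels behind; the function name is hypothetical.

```python
import glob
import os


def find_mxnet_wheel(build_dir="build"):
    """Return the most recently modified mxnet-*-py2.py3-none-any.whl, or None."""
    pattern = os.path.join(build_dir, "mxnet-*-py2.py3-none-any.whl")
    wheels = glob.glob(pattern)
    if not wheels:
        return None
    # Repeated builds can leave several wheels behind; prefer the newest one.
    return max(wheels, key=os.path.getmtime)
```

The returned path can then be copied to the Pi with your usual tool, for example `scp`.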

Install using a pip wheel

Your Pi will need several dependencies.

Install MXNet dependencies with the following:

Install virtualenv with:

Create a Python 2.7 environment for MXNet with:

You may use Python 3; however, the wine bottle detection example for the Pi with camera requires Python 2.7.

Activate the environment, then install the wheel we created previously, or install this prebuilt wheel.

Test MXNet with the Python interpreter:

If there are no errors then you’re ready to start using MXNet on your Pi!

Native Build

Installing MXNet from source is a two-step process:

- Build the shared library from the MXNet C++ source code.

- Install the supported language-specific packages for MXNet.

Step 1 Build the Shared Library

On Raspbian versions Wheezy and later, you need the following dependencies:

- Git (to pull code from GitHub)

- libblas (for linear algebra operations)

- libopencv (for computer vision operations; this is optional, and you can skip it to save RAM and disk space)

- A C++ compiler that supports C++11. The C++ compiler compiles and builds the MXNet source code. Supported compilers include the following:

  - G++ (4.8 or later). Make sure to use gcc 4 and not 5 or 6, as there are known bugs with these compilers.
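The compiler requirement (G++ 4.8 or later, but still in the 4.x series) can be checked from the output of `g++ -dumpversion`. This is a sketch with hypothetical helper names; it assumes `g++` is the compiler the build will use. Some newer GCC releases print only the major number for `-dumpversion`, which the parser handles by treating the minor version as 0.

```python
import shutil
import subprocess


def gcc_version_ok(version):
    """True if a `-dumpversion` string is in the 4.x series at 4.8 or later."""
    parts = version.strip().split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    return major == 4 and minor >= 8


def installed_gxx_version():
    """Return `g++ -dumpversion` output, or None if g++ is not on PATH."""
    gxx = shutil.which("g++")
    if gxx is None:
        return None
    result = subprocess.run([gxx, "-dumpversion"], capture_output=True, text=True)
    return result.stdout.strip() or None
```

For example, `"4.9.2"` and `"4.8"` pass, while `"4.7.3"`, `"5.4.0"`, and `"6"` are rejected.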

Install these dependencies using the following commands in any directory:

Clone the MXNet source code repository using the following git command in your home directory:

Build:

Some compilation units require memory close to 1 GB, so it’s recommended that you enable swap as explained below and be cautious about increasing the number of jobs when building (-j).
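One way to pick a cautious `-j` value is to budget roughly 1 GB of RAM plus swap per parallel job, capped at the CPU count. This is a hypothetical rule of thumb derived from the figure above, not a setting the MXNet build defines; the function and parameter names are illustrative.

```python
import os

GB = 1024 ** 3


def safe_make_jobs(ram_bytes, swap_bytes, cpu_count=None, per_job_bytes=GB):
    """Suggest a -j value assuming each job may need about per_job_bytes."""
    if cpu_count is None:
        cpu_count = os.cpu_count() or 1
    jobs_by_memory = (ram_bytes + swap_bytes) // per_job_bytes
    # Never exceed the core count, and always allow at least one job.
    return max(1, min(cpu_count, int(jobs_by_memory)))
```

With 1 GB of RAM and no swap this suggests a single job; adding 1 GB of swap raises the suggestion to two on a quad-core Pi. Swap is far slower than RAM, so a lower value is still the safer choice if the compiler keeps getting killed.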

Executing these commands starts the build process, which can take up to a couple of hours, and creates a file called libmxnet.so in the build directory.

If you are getting build errors in which the compiler is being killed, the compiler is likely running out of memory (especially if you are on a Raspberry Pi 1, 2, or Zero, which have less than 1 GB of RAM). This can often be rectified by increasing the swapfile size on the Pi: edit the file /etc/dphys-swapfile, change the line CONF_SWAPSIZE=100 to CONF_SWAPSIZE=1024, and then run:
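Separately from the commands that follow, the CONF_SWAPSIZE edit itself can be scripted. This sketch only transforms the configuration text: it replaces an existing uncommented `CONF_SWAPSIZE=` line or appends one if none is present. Reading and writing `/etc/dphys-swapfile` requires root privileges, and the function name is hypothetical.

```python
import re


def set_swap_size(config_text, megabytes):
    """Return dphys-swapfile config text with CONF_SWAPSIZE set to megabytes."""
    new_line = "CONF_SWAPSIZE=" + str(megabytes)
    # Match only an active (uncommented) setting at the start of a line.
    pattern = re.compile(r"^CONF_SWAPSIZE=.*$", re.MULTILINE)
    if pattern.search(config_text):
        return pattern.sub(new_line, config_text)
    # No active setting yet: append one on its own line.
    separator = "" if not config_text or config_text.endswith("\n") else "\n"
    return config_text + separator + new_line + "\n"
```

For example, `set_swap_size("CONF_SWAPSIZE=100\n", 1024)` returns `"CONF_SWAPSIZE=1024\n"`.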

Step 2 Build cython modules (optional)

MXNet tries to use the Cython modules unless the environment variable MXNET_ENABLE_CYTHON is set to 0. If loading the Cython modules fails, the default behavior is to fall back to ctypes without any warning. To raise an exception on failure instead, set the environment variable MXNET_ENFORCE_CYTHON to 1. See here for more details.
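The two variables can also be set from Python. This minimal sketch assumes MXNet reads them when the `mxnet` package is imported, so the helper (a hypothetical name) must run before `import mxnet` in the same process.

```python
import os


def configure_mxnet_cython(enable=True, enforce=False):
    """Set MXNet's Cython switches in this process's environment.

    Call before `import mxnet`; the sketch assumes the variables are read
    at import time.
    """
    # "0" disables the Cython modules; "1" keeps the default of trying them.
    os.environ["MXNET_ENABLE_CYTHON"] = "1" if enable else "0"
    # "1" turns the silent fallback to ctypes into an exception.
    os.environ["MXNET_ENFORCE_CYTHON"] = "1" if enforce else "0"
```

Calling `configure_mxnet_cython(enforce=True)` and then importing MXNet makes a broken Cython build fail loudly instead of quietly running on ctypes.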

Step 3 Install MXNet Python Bindings

To install Python bindings run the following commands in the MXNet directory:

Note that the -e flag is optional. It is equivalent to --editable and means that if you edit the source files, these changes will be reflected in the installed package.

Alternatively, you can create a wheel (.whl) package installable with pip using the following command:

Docker Compose Install Raspbian

You are now ready to run MXNet on your Raspberry Pi device. You can get started by following the tutorial on Real-time Object Detection with MXNet On The Raspberry Pi.

Note - Because the complete MXNet library takes up a significant amount of the Raspberry Pi’s limited RAM, you might have to turn off the GUI and terminate running processes to free RAM when loading training data or large models into memory.

To install MXNet on a Jetson TX or Nano, please refer to the Jetson installation guide.